One of the most intriguing applications of GitHub Copilot is its potential to assist in code reviews.

In this tutorial, we’ll explore how Copilot can help you understand code, suggest improvements, and answer specific questions about the code under review.

You’ll learn two main ways to use Copilot:

- Copilot in the IDE (Visual Studio Code)

- Copilot on GitHub for reviewing pull requests (currently in beta).

Along the way, I’ll share my thoughts on the

use cases and workflows for AI-assisted code reviews,

as well as the weaknesses of this approach.

While GitHub Copilot can transform how we conduct code reviews, we will see that

human oversight remains essential throughout the process.

GitHub Copilot

GitHub Copilot is a coding assistant powered by large language models (LLMs).

It integrates seamlessly with various integrated development environments (IDEs),

providing developers with real-time suggestions, code completions, and review functionality.

Recently, Copilot has also become available (in beta) directly on GitHub,

where it assists developers in reviewing pull requests (PRs).

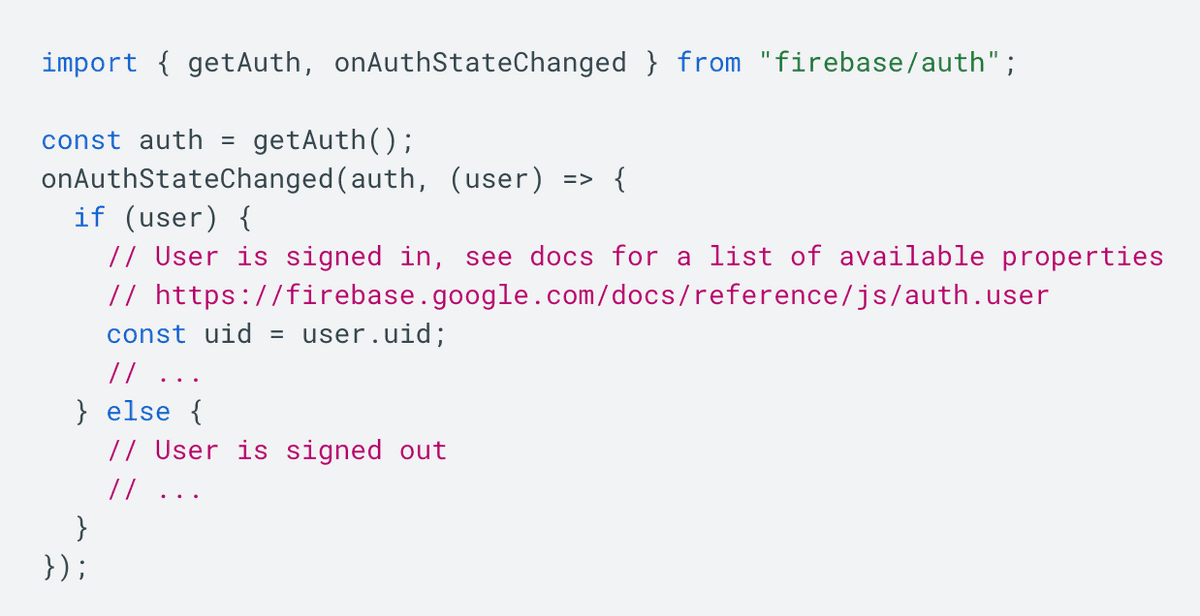

Code Under Review

In this tutorial, you’ll explore how to use GitHub Copilot to review code effectively.

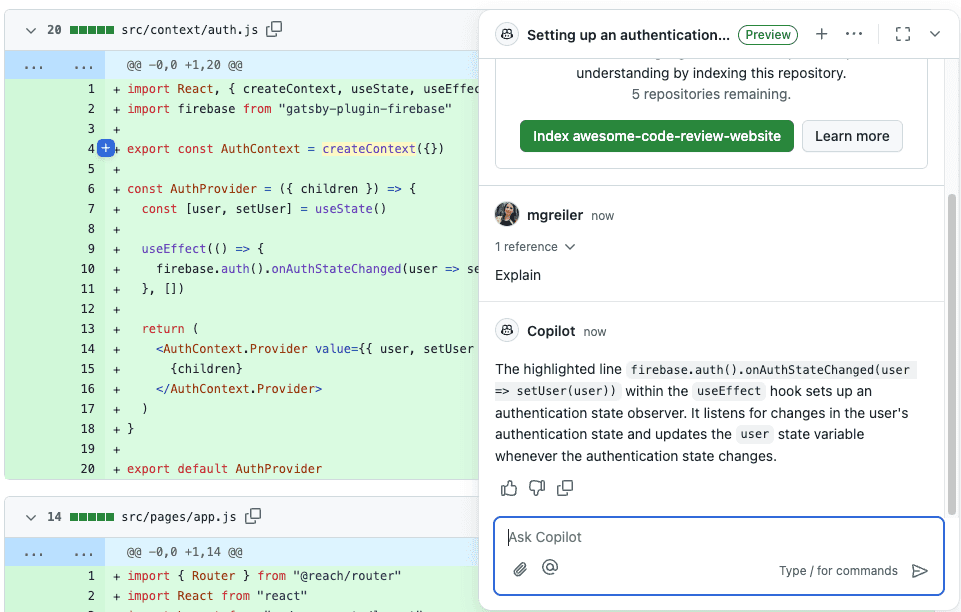

Let’s start with a snippet of code from a file named auth.js.

Below, you’ll find the first code sample we’ll review.

You don’t need to analyze the code in detail right now, but take a quick look.

Do you notice any opportunities for improvement?

    import React, { createContext, useState, useEffect } from "react"
    import firebase from "gatsby-plugin-firebase"

    export const AuthContext = createContext({})

    const AuthProvider = ({ children }) => {
      const [user, setUser] = useState()

      useEffect(() => {
        firebase.auth().onAuthStateChanged(user => setUser(user))
      }, [])

      return (
        <AuthContext.Provider value={{ user, setUser }}>
          {children}
        </AuthContext.Provider>
      )
    }

    export default AuthProvider

Copilot in the IDE

As mentioned earlier, GitHub Copilot is available in IDEs and on GitHub itself.

We’ll begin by reviewing this piece of code within the IDE and later compare it to the review experience on GitHub in the PR view.

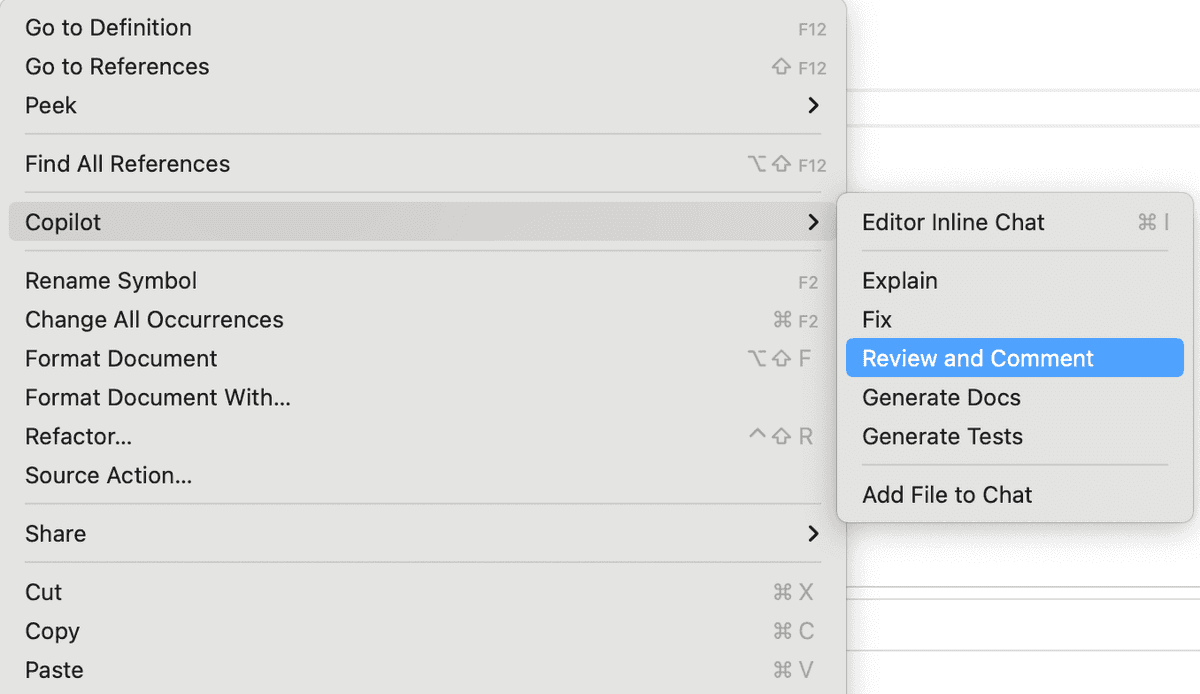

In Visual Studio Code, a small context menu provides access to Copilot’s AI-powered capabilities.

This menu allows you to Explain, Fix, or start a chat (Add File to Chat) about a specific piece of code.

You can also use it to generate documentation or tests with the help of AI.

Recently, Copilot introduced a Review and Comment option for reviewing code directly in the IDE, which is what you’ll use today to get started.

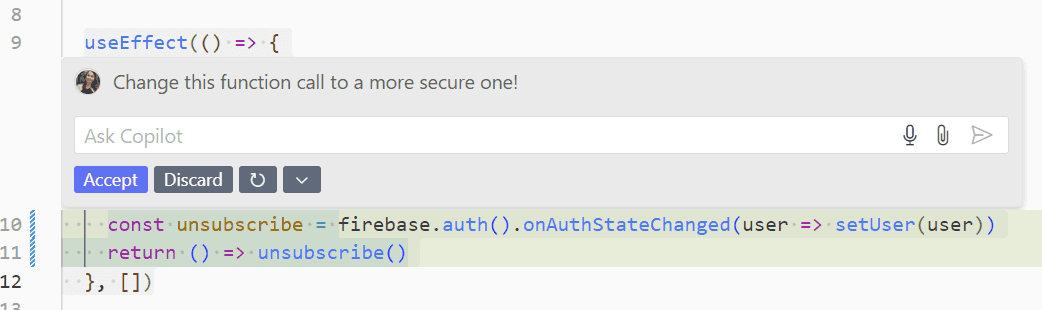

Review and Comment

Previously, the primary option for reviewing code with GitHub Copilot was to start a chat with the LLM. This was particularly useful when you wanted an exploratory and interactive approach to understanding and improving your code.

Now, with the Review and Comment option, you have access to a more

direct, guided, and structured way to review your code.

To start a guided review, select the parts of the code you want to examine

and ask GitHub Copilot to Review and Comment.

Copilot then opens a review interface that highlights suggested changes

line by line within the file under review.

This interface resembles tools commonly used for refactoring or

resolving merge conflicts, making it intuitive for developers.

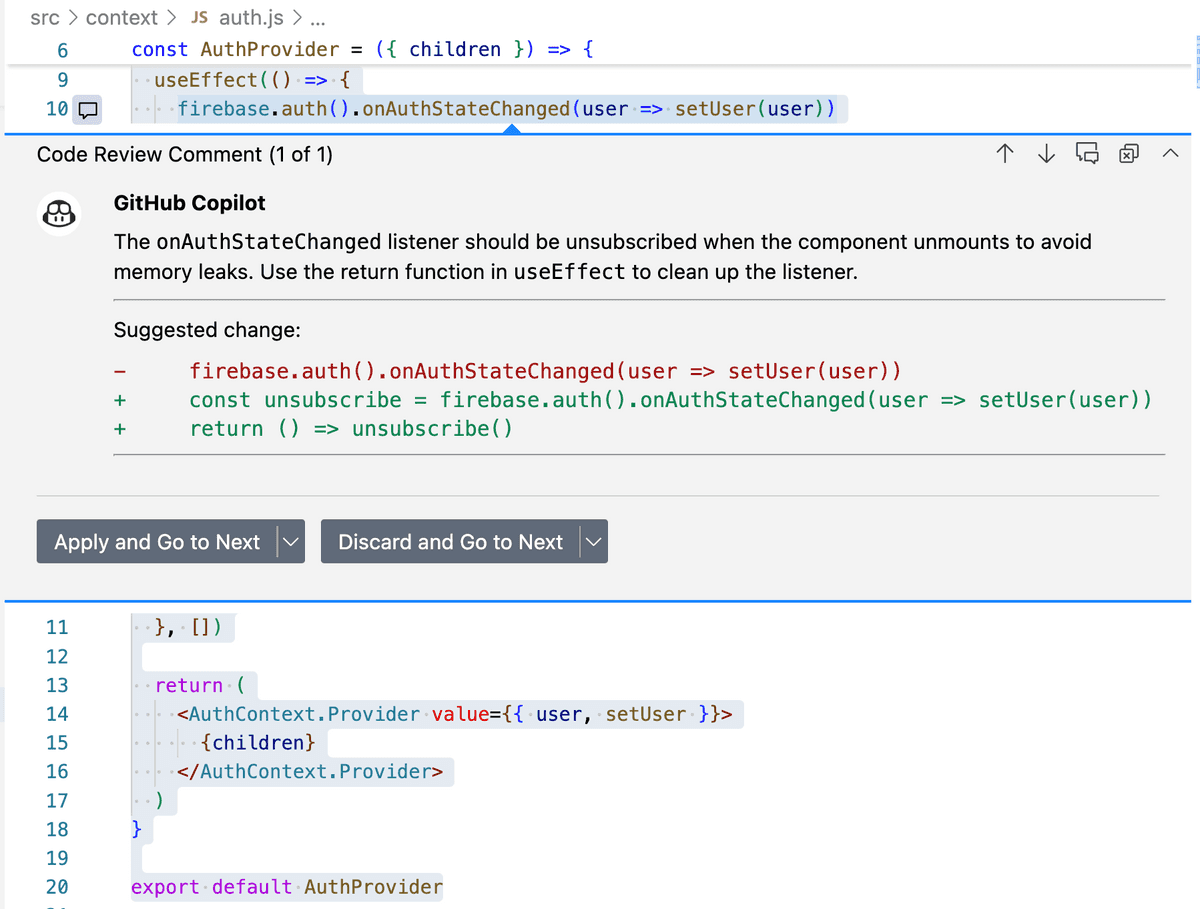

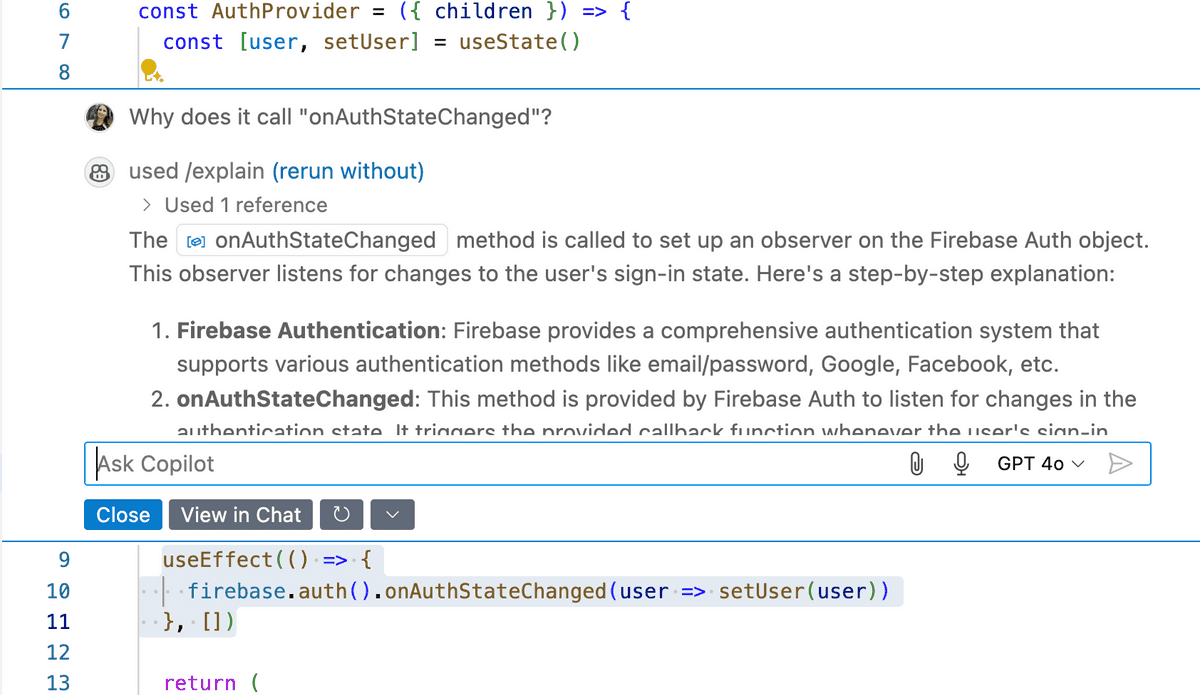

Here’s an example of the interface in action while reviewing

the auth.js file in the IDE:

Line by Line Review

The AI-suggested code change is embedded directly in the code. Copilot injects the review interface just below line 10, the main line of code this review comment addresses.

The review interface highlights the original lines in red and the

suggested changes in green, following a familiar diff-style format.

You can either accept the changes or discard them and move to the next suggestion.

What stands out to me is that Copilot also provides a brief explanation of the

reasoning behind each suggestion, making it easier to understand

the proposed improvements.

However, one usability issue I’ve encountered is that accepting a change

automatically moves you to the next one.

This behavior means you can’t review or verify the outcome of the change

Copilot applied.

I’d prefer an option to accept the change and stay on the same line

(e.g., line 10) to inspect the result before proceeding.

Overall, the Review and Comment functionality is straightforward and enables a highly structured approach to code review, aligning well with automated code review practices.

Let Copilot Explain Code

Another way Copilot can assist in a review is by explaining the code.

This feature helps you build a mental model of the code changes, improving

your ability to provide meaningful feedback during the review process.

To prompt Copilot for an explanation, select the code and right-click to

open the context menu. Then, click Explain.

The executed prompt is: “@workspace /explain Write an explanation for the active selection as paragraphs of text.”

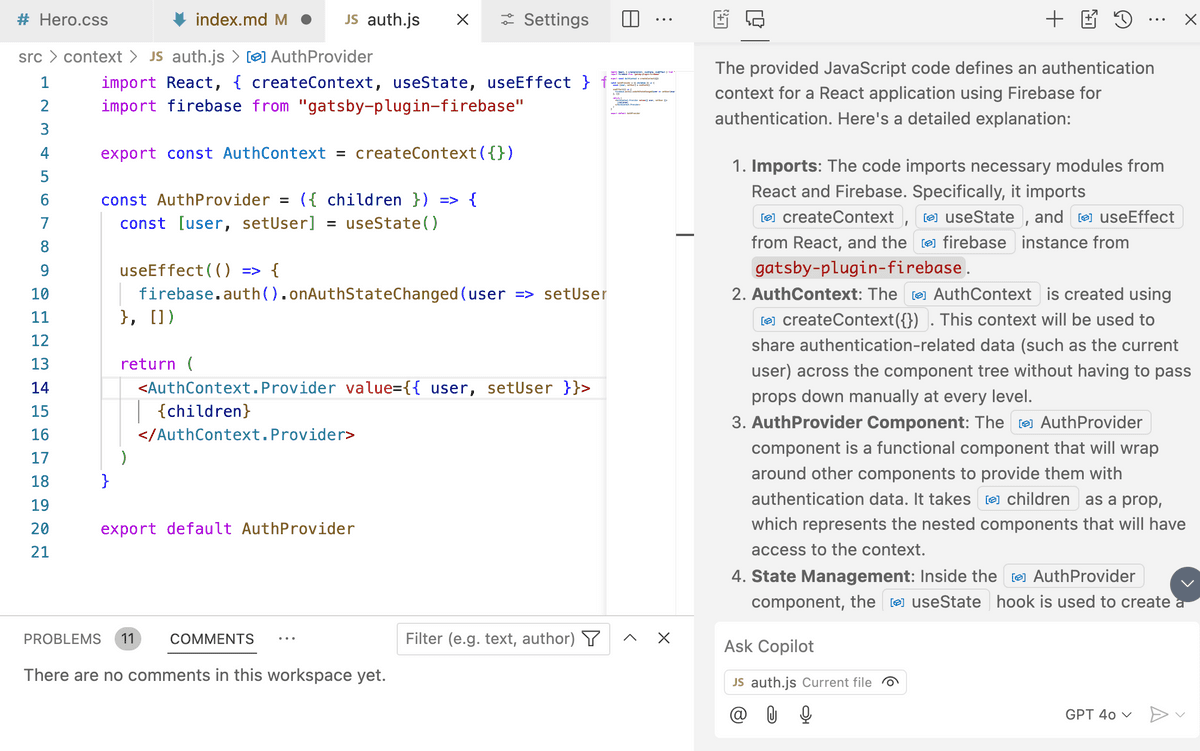

This prompt triggers GitHub Copilot to generate a detailed explanation

of the selected code, as shown in the screenshot below.

The explanation highlights objects, components, and function calls,

making it easier for developers to associate the description with the code itself.

Additionally, functions are clickable, linking you directly to the file

where they are defined.

After the explanation, a chat interface appears, allowing you to interact further with the LLM about the code.

This chat interface is also a great starting point when you want a more exploratory experience with the LLM and the code.

Inline Chat with Copilot

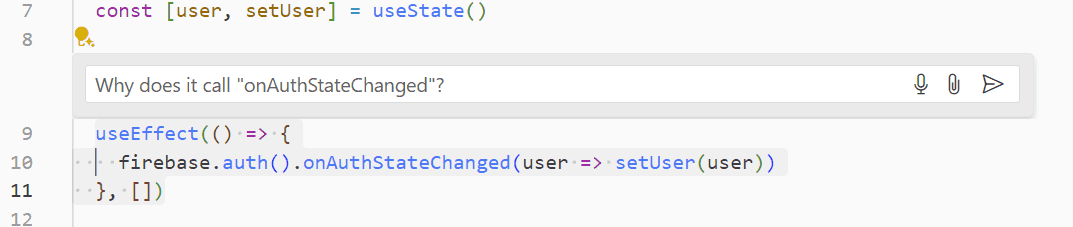

Another way to interact with GitHub Copilot in the IDE is by using the inline chat (Editor Inline Chat) interface, which provides explanations directly within the file view.

One challenge with inline prompts is that the chat window is quite small,

while the explanations or suggestions are often lengthy.

This results in extensive scrolling, which can feel cumbersome and

less user-friendly, as shown in the screenshot below.

Fortunately, you can prompt Copilot to View in Chat, which opens the conversation in a larger, dedicated window (in my case, on the right-hand side).

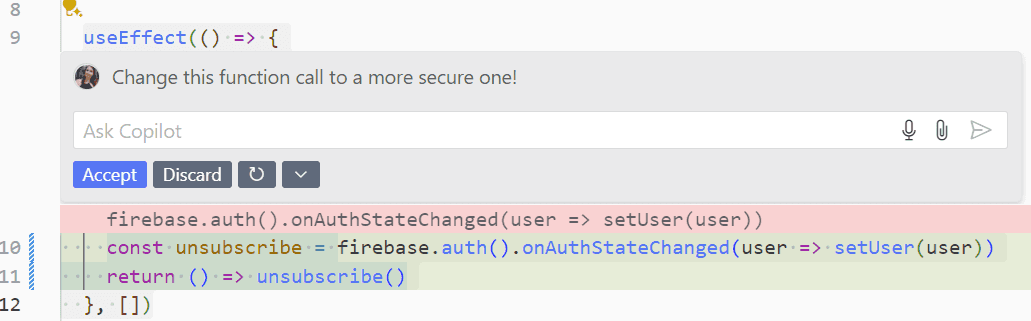

Asking Copilot for Changes

The inline interface is particularly effective for prompting Copilot to make changes.

For example, you can ask Copilot, “Change this function call to a more secure one.”

It will then present you with suggested changes, though without

much explanation by default.

However, the interface initially only displays the newly generated code,

not the original code.

This can make it difficult to compare and evaluate whether the suggested

change is truly helpful.

To address this, you can enable the Toggle Changes option, which shows a side-by-side comparison of the original and new code—similar to what many developers are accustomed to in code reviews.

If you’re unsure about the suggested changes, you can also ask Copilot to explain its suggestions, adding clarity and helping you assess their value.

Chatting with Copilot About the Code

One of the most common ways LLMs assist developers today is through

“traditional” chat interfaces.

In this interface, developers can ask the LLMs questions about the

code or explore it interactively.

GitHub Copilot also offers this method of interaction, providing

a versatile way to engage with the code.

The inline chat we discussed earlier is another representation of

this chat interface.

Similarly, the Explain option brings you directly into this

interface with a prefilled standardized prompt, enabling a seamless

transition between exploration and explanation.

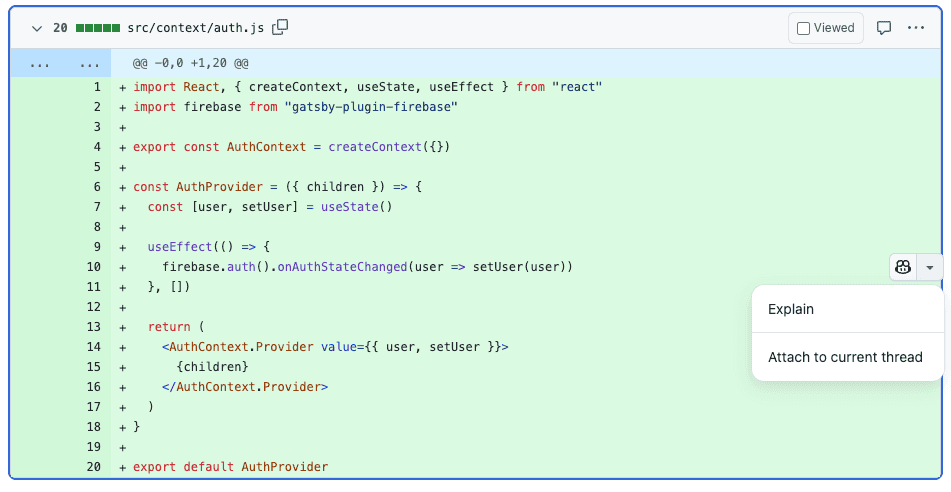

Copilot to Review PRs on GitHub

The GitHub Copilot experience for pull request reviews is currently similar

to the chat functionality in the IDE or tools like ChatGPT on the web.

However, it provides a less directed and structured approach than the Review and Comment interface available for automated code reviews in the IDE.

There are several ways to trigger Copilot directly on GitHub during a PR review.

For instance, you can click on a line of code, which opens a small

Copilot context menu.

From there, you can select options such as Explain or

Attach to current thread to add Copilot’s response to an existing conversation.

AI Code Explanation for Code Review

When you click Explain, it triggers a pop-up window that resembles the familiar chat interface.

Using AI to explain code is an excellent use case

during a code review.

It helps the reviewer understand the code more effectively

and build a mental model of the changes.

However, this feature does not provide improvement suggestions

from Copilot, as shown in the example below.

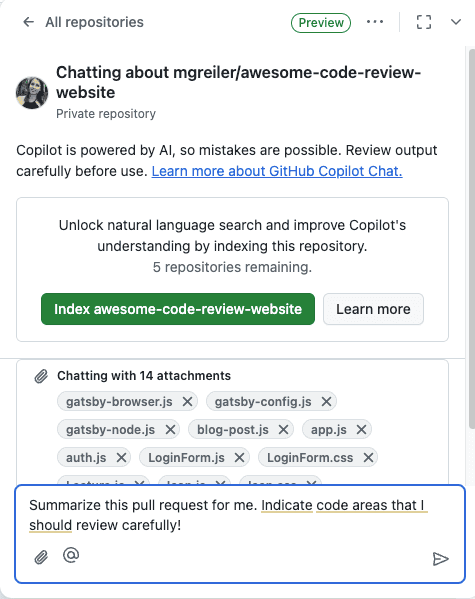

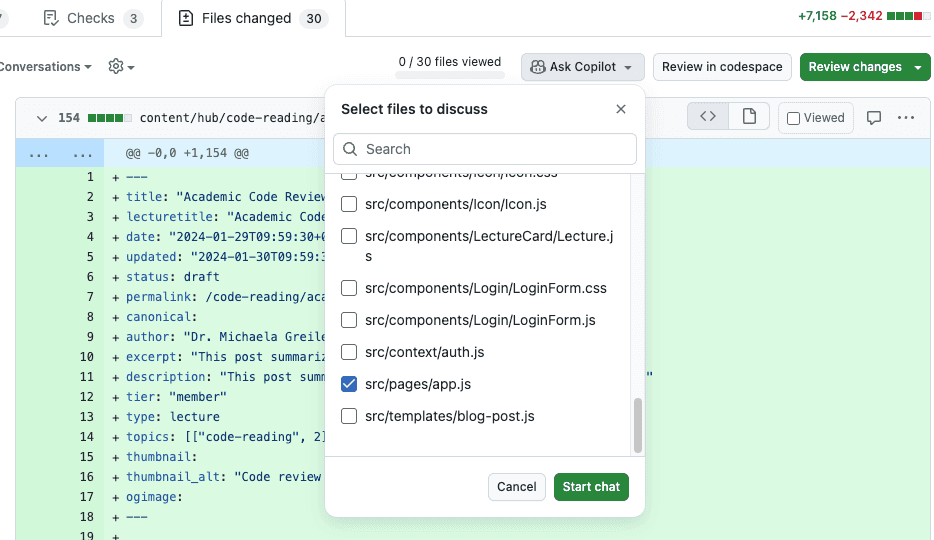

Generating a PR Summary from Multiple Files

Another way to trigger GitHub Copilot is through the menu located above all the file changes, as shown in the screenshot below. This option allows you to select multiple files from the change set and start a chat with Copilot about them.

One practical use case is to have AI automatically summarize the pull request for us. While there are already tools and plugins available that offer automatic PR summarization, GitHub now provides this functionality out of the box.

To try it out, I selected all source code files changed in this PR, along with

relevant configuration files. I omitted content-related files such as markdown

files, as well as certain configuration or generated files like package.json.

The auth.js file is part of a rather large PR, as shown below. I ended up adding 14 source code files to Copilot’s context.

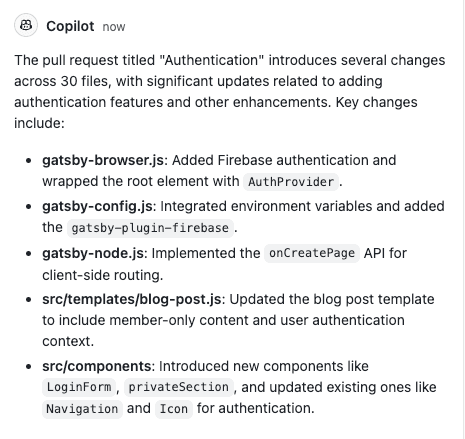

Given the sheer amount of code changes, Copilot’s automated code review summary is surprisingly short and lacks meaningful insights. It primarily lists some of the source code files included in the change set, grouping them together logically.

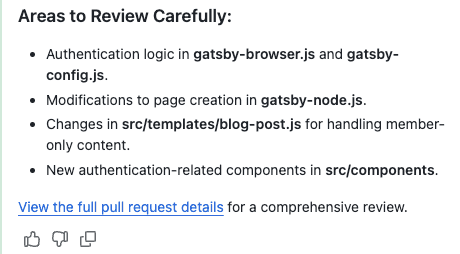

I also asked Copilot to indicate where I should focus my attention during the

code review. While it provided an answer, I found it underwhelming. The response

listed a few files but offered no explanation for why these files were highlighted.

Additionally, the auth.js file—one that I, as a human reviewer, consider highly

relevant—was notably absent from the list.

I am disappointed with both responses. Perhaps my prompt wasn’t clear or specific enough. To address this, I tried again with the prompt: “Summarize the PR in more detail.” Copilot processed the request but returned the same short and unhelpful summary as before. Bummer.

Next, I sent another prompt focused on risk assessment: “What are the most risky changes in this PR?” Once again, Copilot took its time to create the plan, but the answer was almost identical to the previous response. Unfortunately, it offered little insight into the PR, and the risk assessment did not seem appropriate or actionable.

Don’t Use AI for Risk Assessment

A chat interface is a powerful tool, letting you ask any question or provide any instruction you want to the LLM. While this flexibility is valuable, it doesn’t prevent us from making unwise requests. For example, asking an automated code reviewer to assess the risk of a pull request is one such misstep.

LLMs are fundamentally unsuited for assessing risk in source code. They lack the deep understanding of domain-specific logic, semantic nuances, and file interdependencies required for accurate analysis. Risk often arises from how files interact or their role within the system. These are all factors LLMs are incapable of dynamically evaluating.

LLMs treat all input equally, which makes it hard for them to prioritize files by critical factors such as functionality, security, or business relevance. Without domain-specific knowledge or explicit input, LLMs cannot evaluate the importance or business-critical nature of a file.

As these models often respond regardless of their suitability for the task, it is crucial to critically evaluate whether this type of AI is appropriate for the specific task at hand.

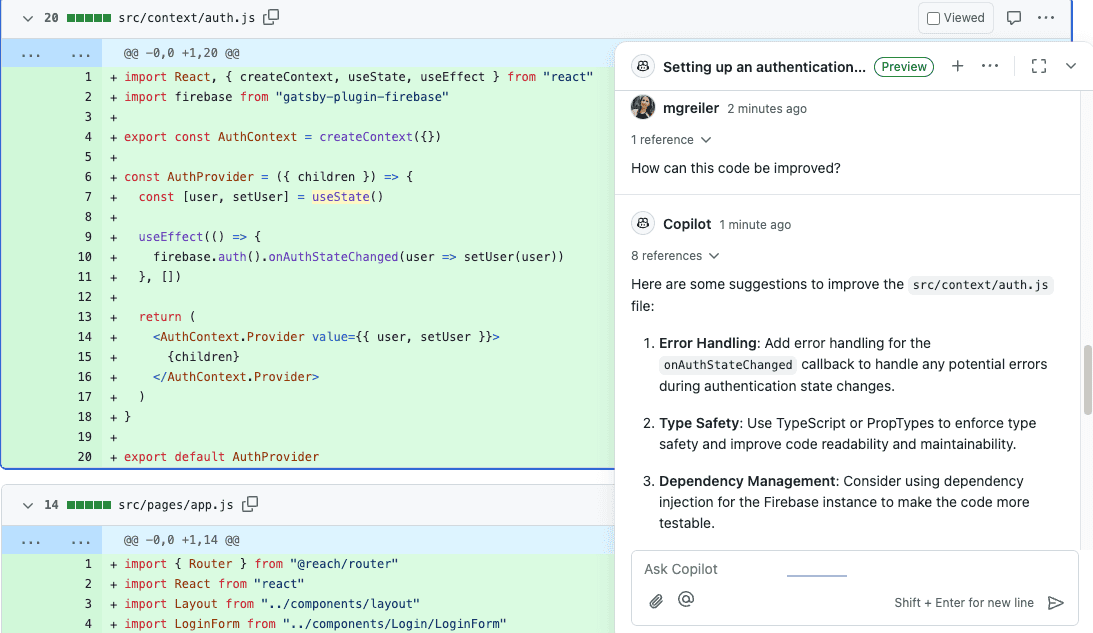

Asking Copilot for Code Improvements

The experience of interacting with Copilot on files may feel smoother when focusing

on a single file. For instance, let’s choose only auth.js. Once selected,

the chat window opens, and Copilot is ready to assist by responding to your questions

or following your instructions.

Let’s ask Copilot: “How can this code be improved?” and review its response.

When you prompt Copilot, the page reloads. If you were examining a specific change in the long list of PR changes, you’ll find yourself back at the beginning of the list. This behavior can be frustrating and unhelpful, as it causes you to lose the context of the code you just reviewed and sought assistance for.

Another negative UX aspect is that the chat window is small, requiring significant scrolling to read Copilot’s full response. Unlike the IDE experience, there is no option to open the conversation in a larger, more convenient window.

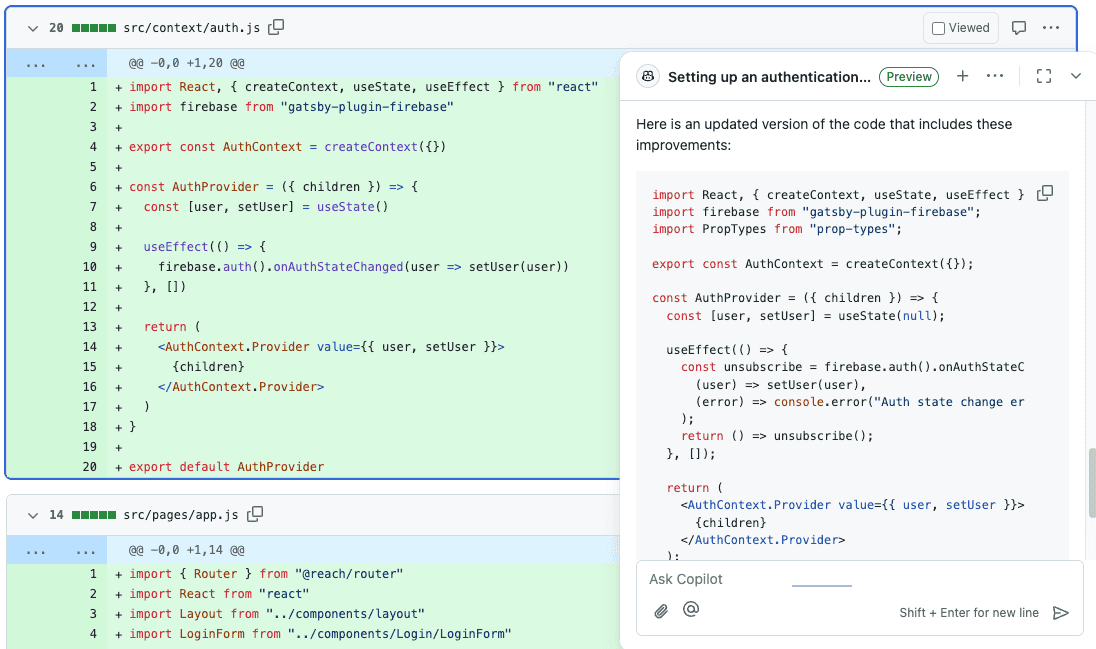

Copilot’s response is detailed and starts with a list of improvement suggestions, including enhancements for error handling, type safety, and dependency management.

When we scroll down, our personal automated code reviewer, Copilot, provides an

updated version of the code that you can copy and paste.

However, during a code review, the ability to copy and paste the

entire updated code is not particularly useful.

What’s more important is mapping the new code back to the code under

review, identifying the specific lines that were changed,

and determining where to leave comments.

Since the piece of code under review is relatively small, it’s manageable

to map the updated code in the chat window to the corresponding lines

in the diff view.

If you find Copilot’s suggestion useful, you can add a comment or make

a concrete code change suggestion directly in the PR.

However, for non-trivial changes, I personally feel uneasy making

edits this way.

For anything beyond a simple adjustment, I prefer to make the changes

within the IDE, where I can immediately verify if I’ve made a

mistake (maybe even just a typo, or copy/paste error).

This ensures a smoother and more reliable workflow, particularly for complex updates.

Should We Ask AI to Review a PR?

The idea of a peer reviewing code already submitted as a PR by

prompting an LLM to improve it feels rather strange.

Ideally, the process of prompting AI to refine code should be driven by

the developer who wrote the code and should occur in the IDE before the

PR is submitted for peer review.

This use case, a self-review, is nicely covered by Copilot in the IDE.

On the other hand, using AI to explain code during a PR review

is a valuable and practical use case.

Similarly, if a reviewer has a suspicion or an improvement suggestion,

interacting with the LLM can help clarify their thoughts.

In such scenarios, it’s unlikely that the reviewer would use

a prompt as general as the one in this example.

More specific prompts tend to yield better results.

A code review checklist can provide inspiration for crafting effective code review prompts.

In most cases, reviewers will have a concrete question or suspicion about the code and will ask the AI code reviewer for targeted feedback directly.
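As a rough illustration of turning checklist items into targeted prompts, here is a minimal sketch in plain JavaScript. The checklist entries and the `buildReviewPrompts` helper are hypothetical and only show the idea. They are not part of Copilot or of any particular checklist.

```javascript
// Hypothetical checklist entries; replace them with items from your own checklist.
const checklist = [
  { topic: "error handling", question: "Are failures of asynchronous calls handled?" },
  { topic: "cleanup", question: "Are subscriptions or listeners released when no longer needed?" },
];

// Build one targeted prompt per checklist item for a given file.
function buildReviewPrompts(fileName, items) {
  return items.map(
    (item) => `In ${fileName}, focus on ${item.topic}: ${item.question} Explain your reasoning.`
  );
}

const prompts = buildReviewPrompts("auth.js", checklist);
prompts.forEach((prompt) => console.log(prompt));
```

Each generated prompt names the file and one concern, which is closer to how a reviewer with a concrete suspicion would phrase a question than a general “How can this code be improved?”.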

AI Helps Reviewers Verify Their Suggestions

One of the most significant benefits AI offers to code reviewers is helping them build a mental model of the changes by explaining the code and providing feedback on their suggestions.

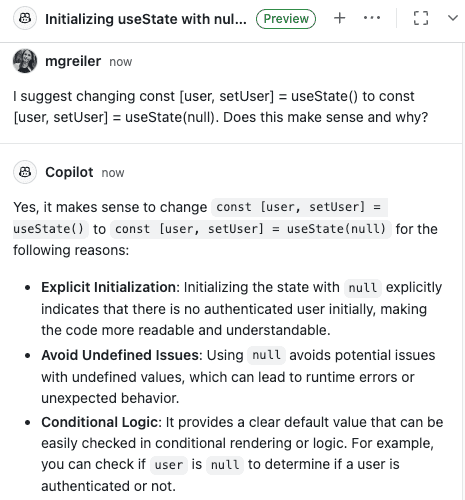

For example, let’s imagine we’re reviewing the code and notice:

const [user, setUser] = useState()

We think it might be better to change this to:

const [user, setUser] = useState(null)

Yet we feel uncertain and insecure about making the suggestion. What if we are wrong? Insecurity during code reviews, and even anxiety, is a common challenge. This is where AI can help us investigate our idea.

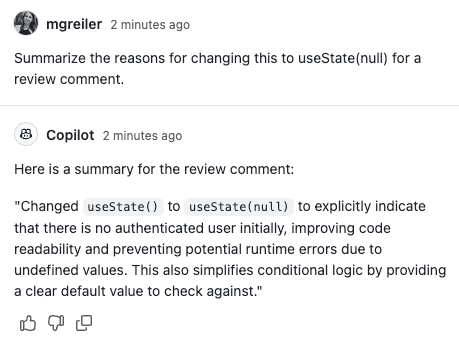

Let’s ask Copilot if our suggestion makes sense and why.

Copilot provides a detailed explanation with three reasons why

initializing useState() with null is a better approach. We can take

it a step further and ask our AI code review helper to summarize this

explanation, making it easier to use as a concise review comment.
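To make the difference between the two initial values more tangible, here is a small sketch in plain JavaScript, without React. It only shows general language behavior of `undefined` (what `useState()` starts with) and `null` (what `useState(null)` starts with). It is not a reproduction of the three reasons Copilot listed, and the `describeUser` helper is made up for illustration.

```javascript
// Plain JavaScript, no React needed: how `undefined` and `null` differ.
const implicit = undefined; // the initial value when calling useState()
const explicit = null;      // the initial value when calling useState(null)

// typeof distinguishes the two values.
const typeOfImplicit = typeof implicit; // "undefined"
const typeOfExplicit = typeof explicit; // "object"

// JSON serialization drops undefined properties but keeps null ones.
const serializedImplicit = JSON.stringify({ user: implicit }); // '{}'
const serializedExplicit = JSON.stringify({ user: explicit }); // '{"user":null}'

// Default parameters replace undefined, but not null.
function describeUser(user = "default user") {
  return String(user);
}

console.log(typeOfImplicit, typeOfExplicit);
console.log(serializedImplicit, serializedExplicit);
console.log(describeUser(implicit)); // "default user"
console.log(describeUser(explicit)); // "null"
```

Both values are falsy, so a check like `if (user)` behaves the same either way. The argument for `null` is mostly about stating explicitly that no user is signed in.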

AI Trustworthiness Is a Problem

One of the biggest challenges with using LLMs for code reviews is the trustworthiness of their suggestions. Although it might seem like AI understands or is intelligent, this is not the case.

The code suggestions provided by Copilot, or any other automated code review tool

that uses LLMs, rely purely on statistical relationships. The LLMs are designed

to predict the next most likely token, resulting in outputs that appear plausible.

However, even though the output may seem correct, it can sometimes be entirely wrong.

I’ve personally observed AI fabricating API calls that don’t exist, suggesting catching exceptions from libraries not in use, and don’t get me started on the suggestions I received for improving CSS code. Even while working on this tutorial, there were moments when Copilot generated incorrect or incomplete code, as shown in the appendix.

While some of the AI-generated code is correct, the potential for errors is too significant to ignore, especially during a code review. A code review is not the right place to take chances with unverified suggestions. This is why it’s critical to thoroughly evaluate any AI-generated code review feedback before acting on it.

Evaluating AI Code Review Suggestions

An LLM can be a valuable companion during a code review, especially when you need it to remind you of best practices or highlight potential issues in the code. However, it’s crucial that you have the competency to evaluate the proposed changes critically (and quickly).

For developers who are less familiar with a framework or programming language, relying on AI for code reviews can be tempting. Yet, the less skilled you are at writing or improving the code yourself, the harder it becomes to assess the validity of the AI’s code review suggestions.

Research indicates that even experienced developers struggle to fully understand, modify, or debug code generated by LLMs.

As a result, evaluating AI-generated code review

suggestions—some of which can be entirely wrong—remains a challenging task.

Evaluating Our AI Code Review Suggestion

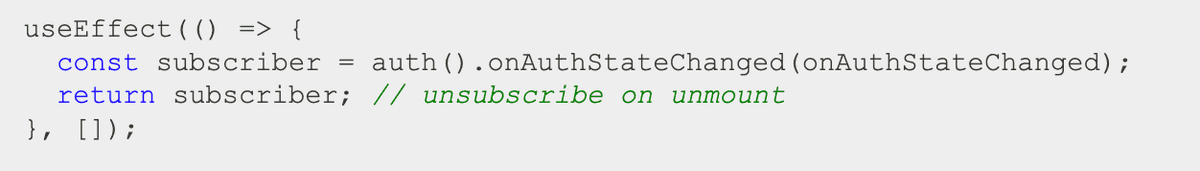

Let’s examine Copilot’s recommendation to modify the line of code where

we call onAuthStateChanged. Our automated code reviewer suggested using

a pattern to ensure that any subscriptions or side effects are properly

cleaned up when a component is unmounted or before the effect is re-executed.

However, if we’re not familiar with React or the Firebase API, it can be

difficult to determine whether this pattern is applicable in this context.

If we refer to the official Firebase documentation, we notice that the code snippets provided there do not use this pattern. This omission could also explain why it’s not present in the existing codebase.

After reviewing the proposed solution, the reviewer might feel even more uncertain:

- Is this a valid pattern?

- Should it be applied in our case?

- Why wasn’t it included in the official documentation?

If the reviewer is unsure about the AI-generated suggestion, there are three possible approaches:

- Trust the AI and recommend the change anyway.

- Stay cautious and decide against recommending the change.

- Dig deeper by conducting further research to arrive at a conclusion.

Option one is not recommended, as it assumes AI is infallible. Option two is a safe choice but may overlook opportunities for improvement. Option three can lead to valuable learning and potentially improve the codebase quality, though it requires more time and effort.

In our case, we chose the third option and found additional documentation on a Firebase-related website. This resource indicates that a listener is returned and can, or should, be used for unmounting.

Does this evidence provide enough justification to propose the change?

Or should we involve a human/colleague to review the AI-based code suggestion?

😜
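The cleanup pattern discussed in this section can be sketched without React or Firebase. The example below uses a hypothetical in-memory stand-in, `createFakeAuth`, whose `onAuthStateChanged` returns an unsubscribe function, which is the behavior the suggested change relies on. It is a simplified model for reasoning about the pattern, not the Firebase SDK.

```javascript
// Hypothetical in-memory stand-in for an auth service; not the Firebase SDK.
function createFakeAuth() {
  const listeners = new Set();
  return {
    // Registers a listener and returns a function that removes it again.
    onAuthStateChanged(listener) {
      listeners.add(listener);
      return () => listeners.delete(listener);
    },
    // Notifies every registered listener about a new user.
    emit(user) {
      listeners.forEach((listener) => listener(user));
    },
    listenerCount() {
      return listeners.size;
    },
  };
}

const auth = createFakeAuth();
const seen = [];

// "Mount": subscribe and keep the returned cleanup function.
const unsubscribe = auth.onAuthStateChanged((user) => seen.push(user));
auth.emit({ uid: "alice" });

// "Unmount": call the cleanup so the listener stops receiving updates.
unsubscribe();
auth.emit({ uid: "bob" });

console.log(seen.length);          // 1, only the update before the cleanup
console.log(auth.listenerCount()); // 0
```

In a React component, returning `unsubscribe` from the `useEffect` callback plays the role of the explicit “unmount” step here. Without it, the listener would stay registered after the component is gone.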

AI Code Review Belongs in the IDE

When an automated code review is conducted in the IDE, the process of iteratively evaluating AI suggestions becomes a natural part of the development workflow. AI-assisted code reviews are an excellent tool for self-review, allowing developers to inspect and improve their own code before involving others. There’s no need to include a peer in this semi-automated code review process—unless during an AI pair programming session.

In the IDE, developers can thoroughly modify, enhance, and test the AI code review suggestions. Yet, by the time the code reaches the code review stage, the developer should have already leveraged AI to assess and refine their solution.

Once a code review begins, the stakes for changing the code based on AI suggestions increase significantly, while the reviewer’s ability to evaluate the suggestion diminishes.

AI Code Reviews Can Be Time-Consuming

While Copilot produced straightforward and sensible suggestions for our code in this tutorial, this is not always the case. Using AI for code reviews can lead to incorrect suggestions, as discussed before, but also to many recommendations that are irrelevant or don’t apply in the given context.

This challenge is similar to the struggle developers face when using static analysis tools for automated code reviews. These tools often generate numerous false positives, meaning they report issues that aren’t actual problems. LLMs add another layer of complexity because they are non-deterministic: they can produce different outputs for the same input.
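Non-determinism can be illustrated with a toy sketch. The example below samples a “next token” from a made-up probability distribution. The tokens, the probabilities, and the `sampleToken` helper are assumptions for illustration only. Real models, including whatever Copilot uses, are far more complex.

```javascript
// Toy next-token distribution; the tokens and probabilities are made up.
const nextTokenProbabilities = [
  { token: "null", probability: 0.6 },
  { token: "undefined", probability: 0.3 },
  { token: "{}", probability: 0.1 },
];

// Pick a token by walking the cumulative distribution.
// `random` is injectable so the behavior can be checked deterministically.
function sampleToken(distribution, random = Math.random) {
  const threshold = random();
  let cumulative = 0;
  for (const { token, probability } of distribution) {
    cumulative += probability;
    if (threshold < cumulative) return token;
  }
  // Guard against floating-point rounding at the upper end.
  return distribution[distribution.length - 1].token;
}

// Same input, potentially different output on every call.
for (let i = 0; i < 3; i++) {
  console.log(sampleToken(nextTokenProbabilities));
}
```

Running the loop several times will usually print different sequences, even though the input never changes. That is the property that makes two review runs over the same code hard to compare.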

Sifting through irrelevant or inconsistent suggestions is a time-consuming task, making it essential to critically evaluate the AI’s output during a code review, but also to question its usefulness and efficiency.

Conclusion: AI Code Reviews Need Human Supervision

Despite advancements in AI, the human element remains irreplaceable in code reviews. There is no such thing as a fully automated code review yet.

AI-generated suggestions can sometimes be terribly incorrect,

making code reviews the wrong place to take these risks.

While tools like Copilot are excellent companions in the IDE, they are still assistive technologies rather than autonomous code reviewers.

That said, don’t underestimate the power of AI assistance. An AI reviewer can help developers focus on details, detect errors, improve code quality, handle repetitive tasks, and help them build a mental model of the code.

By combining human expertise with AI capabilities, we can create a

balanced approach that leverages the strengths of both.

As AI continues to evolve, its role in code reviews will likely expand,

but the need for human oversight will remain critical for a long time.

What’s your experience with AI code reviews? Let me know in the comment section on LinkedIn!

Appendix: Code Generated by Copilot on GitHub

Here’s the complete, improved code snippet that Copilot suggested during our automated code review session.

What do you think?

AI Improved Code

    import React, { createContext, useState, useEffect } from "react";
    import firebase from "gatsby-plugin-firebase";
    import PropTypes from "prop-types";

    export const AuthContext = createContext({});

    const AuthProvider = ({ children }) => {
      const [user, setUser] = useState(null);

      useEffect(() => {
        const unsubscribe = firebase.auth().onAuthStateChanged(
          (user) => setUser(user),
          (error) => console.error("Auth state change error:", error)
        );
        return () => unsubscribe();
      }, []);

      return (
        <AuthContext.Provider value={{ user, setUser }}>
          {children}
        </AuthContext.Provider>
      );
    };

    AuthProvider.propTypes = {
      children: PropTypes.node.isRequired,
    };

    export default AuthProvider;

Code Explanation from Copilot

The code snippet is a React useEffect hook that listens for changes in the

authentication state using Firebase. Here’s a step-by-step explanation:

- useEffect Hook: This hook runs a piece of code after the component renders. The empty dependency array [] means it runs only once when the component mounts.

- firebase.auth().onAuthStateChanged: This is a Firebase method that sets up an observer on the Auth object to listen for changes in the user’s sign-in state.

- Callback Function: The callback function user => setUser(user) is called whenever the authentication state changes. It updates the state with the current user object.

- Cleanup Function: The return () => unsubscribe() part ensures that the observer is unsubscribed when the component unmounts, preventing memory leaks.

Copilot's Incorrect Code Suggestions

Below is an “improved version of the code”, as Copilot calls it.

Can you spot the issue with the proposed code?

    import { useEffect } from 'react';
    import firebase from 'firebase/app';
    import 'firebase/auth';

    const useAuth = (setUser) => {
      useEffect(() => {
        const unsubscribe = firebase.auth().onAuthStateChanged(
          user => setUser(user),
          error => console.error('Auth state change error:', error)
        );
        return () => unsubscribe();
      }, [setUser]);
    };

    export default useAuth;